Trust Me, I’m a Chatbot

How AI Builds Fake Law

Long before machines got into the game, people already trusted bad law. Prosecutors spin; police reports “remember”; judges sometimes invent rules from thin air. And don’t get me started on jailhouse “lawyers.”

Lately, my clients are turning to a new player: artificial intelligence, confidently churning out artificial law. This artificial law looks official, sounds official, feels official. But far too often, it isn’t.

Explaining this to my clients isn’t always easy. And now I know I’m not alone with this problem.

A recent University of Southampton study found that people were more likely to trust legal advice written by ChatGPT than by a real lawyer — especially when they didn’t know which was which. Labels helped less than you’d think. Even when readers knew the source, they still gave the machine’s advice as much weight as the human’s. As much weight, with none of the responsibility.

That’s not just curious; it’s dangerous.

Chatbots may be artificial. The consequences, however, are very real.

Nobody pauses a hearing because “the AI said I could.” Nobody blames the software when a fake case or fabricated statute shows up in a filing. The human in the equation (likely your attorney if he was dumb enough to trust AI’s answer on your say-so) pays when things go awry.

When you rely on artificial law (unless it was a judge pulling it out of his…uh…hmmm…), the costs are real.

The Seduction of Certainty

Why do people trust a chatbot over a lawyer? The Southampton team gives us a clue: the model writes with complexity and confidence. And, they say, lawyers write simply, though at greater length.

Complexity over simplicity. Confidence over caution.

You would think the simplicity would favor the lawyer. We’re taught plain English. We don’t spout Latin that nobody understands when perfectly normal English words exist, because we want our arguments to be easily understood. And the hedge-maze footnotes? We might use them in an actual legal brief but not when explaining something, even in a written memo, to our clients. (Yes, I sometimes provide written memos to clients. It’s quite rare: clients aren’t always careful, and I don’t want memos left lying around for anyone to see. But if a client is having a particularly difficult time “getting” something, I do it.)

But in blind tests, according to the Southampton study, readers preferred the machine. The “complex” tone read as authority; the lawyer’s clarity read as…ordinary. Authority over ordinary! All hail AI, Esq.!

I see the same bias in court. A prosecutor offers a shaky rule with a preacher’s cadence and confidence, and jurors nod. Meanwhile the defense attorney explains the statute, exposes the flaw, and shows the contradiction, and this makes him (or her; I’m not trying to be sexist here, but I am writing from my own point of view!) look hesitant. Explanation looks like doubt.

Doubt loses to confidence.

That’s how the AI wins the trust game. It doesn’t pause (or, if it does, it’s a temporary computer glitch, not a lack of confidence). AI doesn’t hedge. AI doesn’t weigh nuance. It delivers answers the way a closing argument delivers certainty.

But certainty is cheap; accountability is expensive. Or, because I love alliteration — in case you haven’t noticed — cheap certainty, costly consequences.

Lawyers and the Language Trap

Here’s the thing: the problem isn’t polish; it’s our reaction to polish. Plain writing sometimes strips away drama, adds context, and inserts qualifiers. Unlike AI’s confident cacophony, a good lawyer’s plain explanation appears to hedge, hem, and haw. Because what real counsel does is explain risk. And, as I said, to a lay reader explanation can feel like hedging. Hedging looks weak; an unblinking, unwavering performance looks strong. That’s the trap. The weak lawyer discussing possibilities, alternatives, and nuances against a wordy but unwavering Wunderbot.

Clients show me their phones. (Or their folders of print-outs they’ve marked up with all the highlighters of the rainbow!) “Google says….” “ChatGPT told me….” The bot gave them a short, declarative, confident answer. What I give them is layered, cautious, accountable, filled with lots of “it depends” clauses.

Answers versus advocacy. Syntax versus strategy. Output versus obligation.

The only alluring parts are in the affected alliteration.

I may be having a little fun playing with words here, but the gap between style and substance when discussing the legal niceties — or not-so-niceties — of a case with my client is the language trap. AI can mimic authority because it bears no responsibility. It has no sense of responsibility. After all, it’s not a living, thinking, reasoning thing.

Lawyers are. And lawyers do both bear and sense their responsibility to their clients. We carry the messy weight of outcomes. Chatbots are no weightlifters.

When Machines Invent the Rules

I’ve said this readiness to trust a chatbot over a real lawyer is dangerous.

The danger isn’t just that people like how the answers sound. The real danger is that the answers often aren’t law at all. They’re made-up answers. Machine-stitched sentences that look authoritative. And in some cases, because of the obsequiousness built into LLMs, they may just be the AI telling the questioning client (I do love alliteration) what the client wanted to hear. (Or read.)

Ask about how to get more lenient sentencing and get a confident citation to a case that never existed. Ask about bail and get a crisp “rule” no judge has imposed. Bail, by the way, is particularly difficult. Since Humphrey, there has been tension between higher courts offering constitutionally driven critiques of lower courts and those lower courts’ resistance to releasing people, out of fear that something will go wrong and hurt the judges’ chances of re-election.

But to an untrained eye, AI’s answer looks like law. It’s not law — it’s a mirror. Plausible on the surface, hollow inside. Surface without substance.

People carry those mirrors into police interviews and into my office, and sometimes they persist in trying to get their own word in at court. But when the mirror shatters, the bot doesn’t bleed. If I can’t find a way to take the mirror away, and it shatters in court, my clients will bleed.

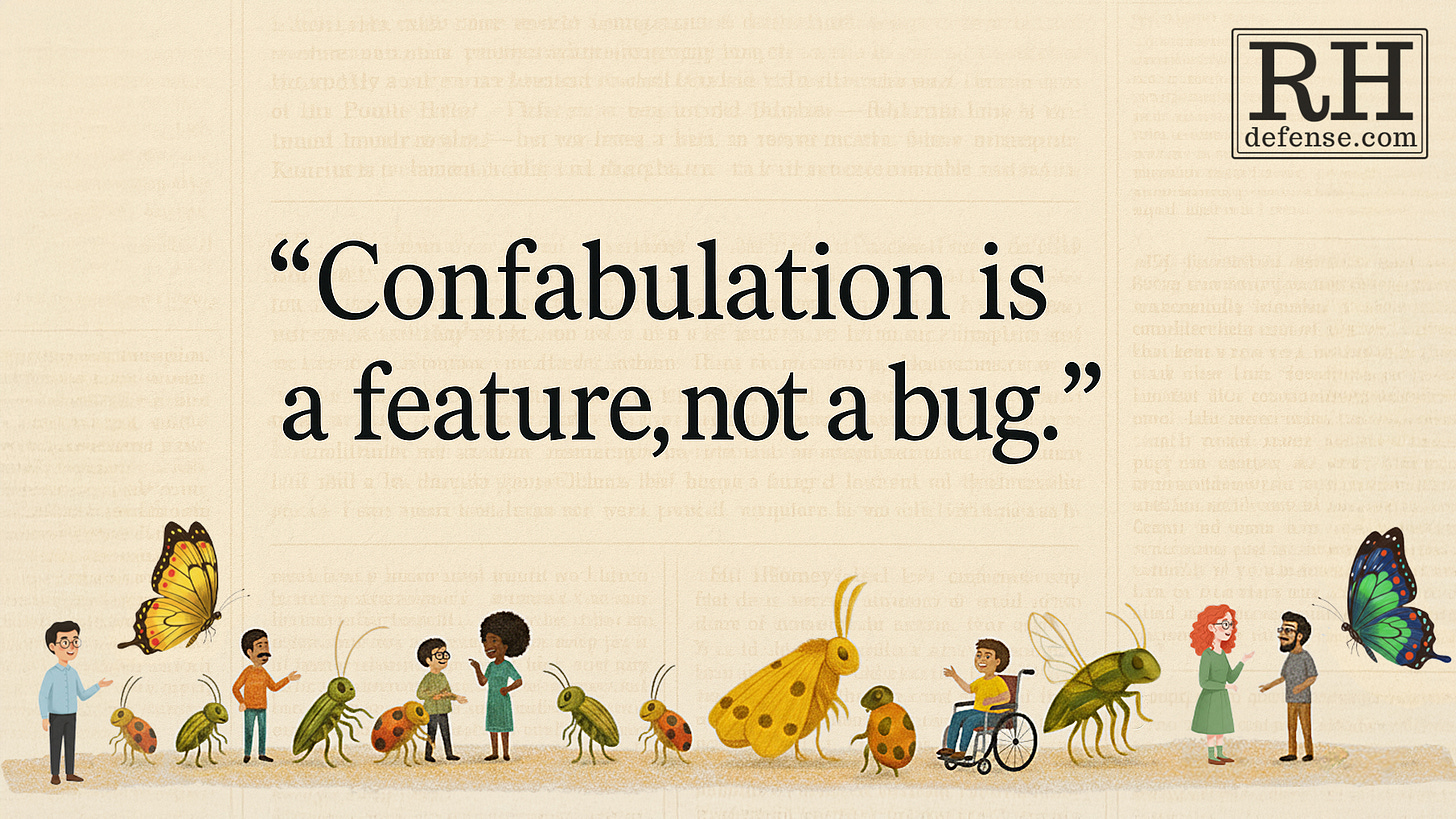

Confabulation as a Feature, Not a Bug

Technologists like to call these errors “hallucinations”. That’s the wrong metaphor. Hallucinations are false perceptions; but language models don’t perceive. What they do is closer to confabulation: filling gaps with stories that sound right even when they’re false. It’s not a rare glitch. It’s an expected behavior because it’s how they work in the first place. It’s not an aberration — it’s a feature.

Predictive systems (and that’s what AI is, especially LLMs) earn their keep by anticipating what comes next. I’ve argued with some who think I’m being too simplistic when I say this, but LLMs are like colossal autocorrect cobblers, crafting clueless compositions. The same machinery that generates fluent summaries also generates fluent fabrications. Build a next-word engine, and you’ll get next-word law. Sometimes it will be correct; often it will be convincing; occasionally it will be invented. The fluency breeds trust, but that trust invites trouble.
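For the curious, here is a deliberately tiny sketch of what a "next-word engine" means. It is a toy word-pair model, not how any real chatbot is built (real LLMs are vastly larger and work differently under the hood), and the three training sentences, the function names, and the seed are all invented for illustration. What it shows is the basic move: pick a plausible next word, repeat, and never check whether the result is true.

```python
import random
from collections import defaultdict

# Toy training text: three invented sentences, NOT real legal authority.
CORPUS = [
    "the court held that the statute requires notice",
    "the court held that the defendant waived notice",
    "the statute requires that the defendant appear",
]

def train_bigrams(sentences):
    """Map each word to the list of words that followed it in training."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=12, seed=0):
    """Repeatedly pick a word that has followed the previous one.

    Nothing here checks whether the finished sentence is true.
    """
    rng = random.Random(seed)  # fixed seed (an arbitrary choice) for repeatability
    words = [start]
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)

model = train_bigrams(CORPUS)
sentence = generate(model, "the")
print(sentence)
```

Every adjacent pair of words in the output appeared somewhere in the training text, so each step reads smoothly. The sentence as a whole, however, may be one that no court ever wrote.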

Naming the Problem: Confabulation, Bullshit, or Both?

So what do we even call it when machines make things up?

“Hallucination” has become the industry’s favorite label. But that word misleads. Hallucinations are false perceptions. Large language models don’t perceive. What they do is closer to confabulation — filling gaps with plausible stories that feel coherent, whether true or not.

And confabulation isn’t a rare malfunction. It’s the default. Elizabeth Loftus’s work on memory showed this decades ago: people don’t store facts like files. We store fragments and stitch them together. When we tell a story — when we construct a memory — we’re always confabulating. Sometimes we do so accurately; sometimes not.

The neuroscience lines up. In the 1980s, Benjamin Libet wanted to explore how people decide to do things and when they actually “know” they’ve decided to do something. Libet asked participants in the study to flex a wrist or finger whenever they felt like it.

Somewhat surprisingly, Libet found the brain’s “readiness potential” firing hundreds of milliseconds before the study’s participants reported deciding to act. Later work by Patrick Haggard and John-Dylan Haynes extended the gap to seconds.

Conscious choice, it turns out, may just be a story the brain tells after the fact. Which means confabulation isn’t the exception. It’s the rule.

As I argued in an earlier blog post, Ghosts in the Machine, Daniel Dennett’s “War and Peace” analogy makes the same point at a larger scale.

Britain and the United States signed the Treaty of Ghent in December 1814, ending the War of 1812. But two weeks later the Battle of New Orleans raged on, because the soldiers hadn’t gotten the message.

So when, Dennett asked, did Great Britain know it was at peace? There is no single moment. Knowledge in a system like an empire or a brain (and, I believe, in an AI system like an LLM chatbot) is distributed, delayed, reconstructed. Consciousness, Dennett argued, is no different. There’s no central theater. Just multiple drafts stitched together after the fact.

That’s why I prefer confabulation, rather than hallucination, as the correct way to describe what AI systems do.

It captures the mechanism and reminds us this isn’t an occasional glitch but the background hum of predictive systems. In fact, I’ve come to believe confabulation and predictive processing are inseparable. Just because most confabulations line up with reality doesn’t mean they’re not confabulations. (Prelude to a “coming attraction”: this is the focus of my next Substack post.)

Even the word — confabulation — hints at the point. Ironically, the word derives from the Latin (see? sometimes I do use Latin!) word “confabulari”. And the word originally meant “to gossip, chat, or discuss something with somebody”.

The word ‘fabula,’ contained in confabulation, primarily meant a chat, dialog or tattle with people, but it also meant the saga, fairy tale, and fiction.

— Confabulation, in Encyclopedia of Human Nutrition (3d ed. 2013)

M.H. Abrams wrote about “fabulation” in literature.

[Such novels] violate, in various ways, standard novelistic expectations by drastic—and sometimes highly effective—experiments with subject matter, form, style, temporal sequence, and fusions of the everyday, the fantastic, the mythical, and the nightmarish, in renderings that blur traditional distinctions between what is serious or trivial, horrible or ludicrous, tragic or comic.

— M.H. Abrams, A Glossary of Literary Terms 204 (8th ed. 2005) (with Geoffrey Galt Harpham)

Confabulation works the same way. Sometimes the experiment is absurd, laughable, even nightmarish. But sometimes it’s highly effective — producing answers that look not just plausible but persuasive, even accurate. That doesn’t make it any less confabulation. It just shows why we so readily buy it.

Some philosophers, including Joe Slater and his colleagues, prefer a blunter term: bullshit. Their argument is that hallucination and confabulation describe inner processes, while what misleads us is the communicative act. Bullshit, in Harry Frankfurt’s sense, is indifferent to truth or falsity — concerned only with effect.

I don’t fully agree with their rhetoric, for reasons I’ll go more deeply into with my next Substack article. (Maybe a reason to subscribe???)

But even if I did agree, as a lawyer I can’t drop “bullshit” into every legal discussion or brief I write that might discuss AI.

More importantly, I think confabulation gets us closer to the heart of what both brains and machines are doing. As Peiqi Sui et al. put it:

We believe the mental picture that LLMs hallucinate because they are untrustworthy, unfaithful, and ultimately unhumanlike is an oversimplified one. Instead, they confabulate and exhibit narrative-rich behavioral patterns closely resembling the human urge of storytelling — perhaps hallucinations make them more like us than we would like to admit.

— Peiqi Sui et al., Confabulation: The Surprising Value of Large Language Model Hallucinations, in Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL 2024) 14280, 14286 (2024)

In any event, call it confabulation, call it bullshit, or call it both — it isn’t counsel. Not judgment, not loyalty, not someone willing to stand beside you when the consequences hit. It doesn’t think. It has no empathy. It doesn’t have the drive to fight for you that a real lawyer has.

Trusting the Wrong Game

I’ve described all of this as a “trap” for those who engage in the game.

And game theory explains the trap.

Back in the 1980s, political scientist Robert Axelrod ran computer tournaments to test strategies for the repeated prisoner’s dilemma. Dozens of entrants submitted programs designed to outsmart the others. The surprising winner wasn’t the most complex or sneaky. It was the simplest: tit-for-tat. Start by cooperating. If the other side defects, punish once. If they return to cooperation, forgive and cooperate again. That rhythm (nice, retaliatory, forgiving, and clear) earned the highest overall score. Cooperation survives only when it’s reciprocal. Reciprocity or ruin.

This worked because, as Axelrod explains:

What makes it possible for cooperation to emerge is the fact that the players might meet again. This possibility means that the choices made today not only determine the outcome of this move, but can also influence the later choices of the players. The future can therefore cast a shadow back upon the present and thereby affect the current strategic situation.

— Robert Axelrod, The Evolution of Cooperation 12 (1984)
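The tit-for-tat rule described above is simple enough to sketch in a few lines. This is a minimal illustration, not Axelrod's tournament code: the function names and the ten-round match length are my own assumptions, and the point values (3 each for mutual cooperation, 5 and 0 when one side defects, 1 each for mutual defection) are the ones commonly cited for his tournaments.

```python
# Payoffs as (points for A, points for B). "C" = cooperate, "D" = defect.
# Values commonly cited for Axelrod's tournaments; treat them as an assumption.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def tit_for_tat(opponent_history):
    """Cooperate first, then copy whatever the opponent did last round."""
    return opponent_history[-1] if opponent_history else "C"

def always_defect(opponent_history):
    """A player with no stake in the relationship: defect every round."""
    return "D"

def play(strategy_a, strategy_b, rounds=10):
    """Play repeated rounds and return the total score of each player."""
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        a = strategy_a(moves_b)  # each side sees only the other's past moves
        b = strategy_b(moves_a)
        points_a, points_b = PAYOFFS[(a, b)]
        score_a += points_a
        score_b += points_b
        moves_a.append(a)
        moves_b.append(b)
    return score_a, score_b

print(play(tit_for_tat, tit_for_tat))    # (30, 30)
print(play(tit_for_tat, always_defect))  # (9, 14)
```

Two reciprocators earn 30 points apiece. Against a player who never reciprocates, tit-for-tat is burned once and then stops cooperating, and both sides end up far poorer. Tit-for-tat never outscores its opponent within a single match; it won Axelrod's tournaments on total points across many pairings.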

People treat chatbot answers as if they’re part of a reciprocal exchange with a real person. And not just that, but with a person with whom there is a shadow of a future that is cast back upon the relationship.

I ask, it answers, therefore I can rely.

But software doesn’t reciprocate. No shared costs. No risk. No consequences. It exploits the appearance of reciprocity without giving anything back. Appearance without reciprocity.

That’s the civility trap in digital form: one side keeps playing fair; the other “wins” by looking cooperative. Looking cooperative, not being accountable.

It’s not accountable because it’s not really a person. There is no “future shadow” because LLMs don’t have that kind of persistent memory. Though you could be forgiven for believing otherwise, based on how a chatbot communicates, there is no relationship.

But hire a human attorney? BOOM! There’s your relationship. There’s your current strategic situation and your future shadow that will cast back upon the relationship.

Social Reasoning—and Its Limits

Behavioral-game studies tell the same story from another angle. Out of the box, top models excel at cold logic — spotting selfish moves, retaliating instantly, playing hard tit-for-tat.

They struggle with the long game: building trust, compromising, sacrificing short-term gain for mutual benefit. Trust is costly; models are costless.

If you nudge them to consider the other player’s perspective (so-called social chain-of-thought), they can act more cooperative. But that’s simulation, not verisimilitude. And chatbots have no stake in the outcome of your situation. To quote the notoriously difficult (but here entirely apropos) Jean Baudrillard:

By crossing into a space whose curvature is no longer that of the real, nor that of truth, the era of simulation is inaugurated by a liquidation of all referentials - worse: with their artificial resurrection in the systems of signs, a material more malleable than meaning, in that it lends itself to all systems of equivalences, to all binary oppositions, to all combinatory algebra. It is no longer a question of imitation, nor duplication, nor even parody. It is a question of substituting the signs of the real for the real, that is to say of an operation of deterring every real process via its operational double, a programmatic, metastable, perfectly descriptive machine that offers all the signs of the real and shortcircuits all its vicissitudes.

— Jean Baudrillard, Simulacra and Simulation 2 (Sheila Faria Glaser trans., Univ. of Mich. Press 1994) (1981)

The behavior changes because the prompt changed — not because the system now carries the cost of being wrong.

Artificial Counsel vs. the Right to Counsel

The Constitution doesn’t guarantee answers. It guarantees counsel.

Counsel is judgment, presence, loyalty. The person who stands with you in front of a jury. The person who hopefully understands how this judge hears that phrase. The person who will take heat to challenge a precedent that needs to be challenged. Not chatbot text — adversarial advocacy.

A chatbot can hand you a statute. It cannot weigh tradeoffs, smell a poisoned plea, or anticipate how a witness will break under cross. It cannot stand between you and the state when the stakes are highest. When the stakes go up in the courtroom, outputs don’t stand — human lawyers do.

Clients who confuse artificial answers with real advocacy make the mistake game theory warns against: cooperating in a game where the other side will never reciprocate. ChatGPT cannot reciprocate.

That’s not a failure of technology. It’s not as if the bot decided not to reciprocate. It’s simply not able to do so. It’s not a part of its programming. The model pays no price for error. There is no future shadow casting itself back on your current chat session.

But human lawyers. They have what’s necessary for reciprocity. And they have skin in the game. At least, the good ones do. Not only do most lawyers hate to lose on principle, but good lawyers hate to lose their clients’ cause because of the consequences to those clients.

That’s just how we’re built. We are programmed for reciprocal relationships. We are programmed to have a stake.

Some of us are even programmed to drive it into the heart of the prosecution’s case.

The Cost of Artificial Law

The lesson is simple. People trust fluency and confidence. Machines supply both. Fluency plus confidence equals artificial law — persuasive, plausible, but with a great potential to be false. Persuasive enough to act on; false enough to harm.

A real live human lawyer will use the tool to frame questions, surface sources, sketch options. A real live human lawyer can do this because a real live human lawyer has spent years studying — and then more years gaining reflective experience on — how to frame questions, how to surface sources, and how to sketch options.

But when a real live human lawyer uses an AI tool, we don’t mistake it for law, and we don’t mistake it for counsel. We verify. Confirm. Cross-check. Answers are cheap; accountability is dear. We know the chatbot will never pay the price, nor mourn the mess it’s made.

When the system bears down, you won’t want artificial law at your side. You’ll want someone who can fight back, defect when defection is required, and carry the cost of being wrong. Tit-for-tat.

Not just words — a witness to your defense.

Justice doesn’t come from how confidently something is said. (Hell, the most ardent liars are often confident cops!) It comes from who will stand beside you when everything else is against you.

I’m not talking about confidence. Confidence is nice, but it’s not always available.

What I am talking about is courage.

That’s another thing chatbots lack.

Key Takeaways:

People trust confidence more than competence. Chatbots sound certain; lawyers sound cautious. Guess which one feels more convincing.

“Hallucination” isn’t the right word. What AI does is confabulate — weaving together words that feel true whether they are or not. That’s not a glitch; it’s how they work.

Humans confabulate, too. Memory isn’t a filing cabinet — it’s a storyteller. But when a witness does it, we cross-examine. When a machine does it, too many people just nod.

Trust is built on reciprocity. Game theory shows cooperation survives only when both sides share risk. AI doesn’t share risk. No consequences. No skin in the game.

The Constitution guarantees counsel, not chatbot answers. Counsel means judgment, loyalty, courage — the person who stands next to you when the stakes are high.

Fluency without accountability isn’t law. It’s just language. And language alone won’t keep you out of prison. Especially if it’s the wrong language.

My clients have been sharing the fruits of their AI law degree with increasing frequency. Do they know I charge them for every analysis of their research, and for writing the email or memo telling them why the research is all wrong?!